Illustration: Marcelle Louw for GIJN

Editor’s Note: This post is the final installment from GIJN’s forthcoming Reporter’s Guide to Investigating Digital Threats. Part one, on disinformation, part two, on digital infrastructure, and part three, on digital threats, have already been published. The guide will be released in full in September 2023 at the Global Investigative Journalism Conference.

When we navigate social media, chat rooms, online communities, and other platforms, we often encounter people who antagonize or provoke others into an emotional response. They’re called internet trolls.

Internet users often label any controversial opinion or disagreeable interaction as trolling. It can be difficult to prove that someone is deliberately trolling, but nowadays it’s common to find pseudonymous or anonymous accounts using trolling techniques as part of harassment campaigns. They lob insults and slurs, spread false or misleading information, or disrupt genuine conversations with offensive or nonsensical memes. The goal is to annoy, to malign, to push a particular message, and to degrade the online experience of a person or a specific group. Investigative journalists need to learn how to identify trolling, and how to tell whether it’s an isolated event or part of a harassment campaign.

Trolling as Business Model

In order to identify, analyze, and expose trolls and trolling campaigns, we need to understand how trolling became a business model.

In the late 1980s and early 1990s, the term trolling described users who provoked and mocked newcomers; it was part of internet culture. Later, online communities such as 4chan and other internet forums emerged where trolling was expected behavior. When social media arrived, some users were already familiar with online trolls. Many weren’t, and got their first taste of this subculture. As the internet expanded, trolling intersected with extreme social behaviors, including bullying and stalking, and was also used by people suffering from psychological disorders.

Eventually, governments and political officials “weaponized” trolling to attack their enemies, the opposition, and the media. In 2014, leaked emails exposed the Chinese regime’s creation of an army of internet trolls: the so-called 50 Cent Army employed at least 500,000 people to leave fake comments on news articles and social media. By 2017, estimates of the army’s size had grown to two million people. The goal of that massive operation was to drown out conversations and information the regime deemed undesirable while cheerleading for the government and the Chinese Communist Party.

As other astroturfing operations were exposed as part of cyber warfare, researchers and journalists began publishing more work on the subject. In 2016, Latvia faced a form of hybrid warfare from Russia that featured online trolling. In a NATO report on the Latvian case, analysts distinguished between classic trolls (managed by one or more humans) and hybrid trolls (partially automated and partially managed by humans).

During the 2016 US elections, the Russian Internet Research Agency (IRA) waged an aggressive campaign to disrupt the vote, using fake social media accounts that trolled Hillary Clinton and her supporters. Researchers also documented how the IRA became part of the Kremlin’s electoral interference playbook, targeting elections around the world. In 2018, US federal prosecutors charged the IRA with interfering in the US political system through, essentially, trolling and disinformation. Trolling has also taken root in other countries’ political systems. In the Philippines, it’s common for campaigns to hire marketing agencies that specialize in using trolls to spread propaganda and attack the opposition.

Trolling has evolved to become a component of information operations and military strategies. Troll farms and troll factories in small European countries and parts of Asia can produce fake content at scale. A similar model exists in Latin America, where digital agencies create troll factories for electoral purposes, as seen in Mexico’s 2018 presidential election. Today, professional trolling is a global business.

How Trolls Gain Reach

Not every troll is the same, but there are some consistent behaviors — and tactics — they employ. The most common are:

- Amplification through social media: Trolls use social media platforms to spread their messages, often creating multiple accounts or employing automated bots to amplify their content. By generating likes, shares, and comments, trolls can manipulate platform algorithms to make their content more visible and reach larger audiences.

- Hashtag hijacking: Trolls monitor trending hashtags or create their own to spread disinformation or harass targeted individuals. This enables them to inject their messages into popular conversations and gain more exposure.

- Emotional manipulation: Trolls often use emotionally charged language and provocative content to elicit strong reactions from users. So-called rage farming, deliberately posting content meant to provoke outrage and the engagement that comes with it, is very common.

- Astroturfing: Trolls create the illusion of grassroots support for a particular cause, idea, or narrative by coordinating a large number of fake accounts. This tactic can make their disinformation campaigns appear more credible, and create the aura of popular support.

- Targeting influential individuals: Trolls may target celebrities, politicians, or high-profile individuals to capitalize on their followings and gain more attention.

- Creating and spreading memes: Trolls use humorous or provocative memes to spread disinformation or offensive content. Memes can go viral quickly, reaching large audiences.

- Deepfakes and manipulated media: Trolls may use advanced technology to create fake videos, images, or audio recordings to spread disinformation, discredit individuals, or fuel conspiracy theories.

- Exploiting existing divisions: Trolls often capitalize on societal divisions, such as political, religious, or cultural differences, to amplify discord and polarize online discussions.

Trolls and Harassment Campaigns

To understand how troll factories work and how they behave in harassment campaigns, we need to identify their modus operandi and their methods.

Troll factories produce fake social media accounts and websites to spread their narratives. Trolls also target specific platforms and communities to amplify their narrative. In many ways, they operate like any digital marketing agency, creating messages and content and strategically targeting them across channels to achieve their goals.

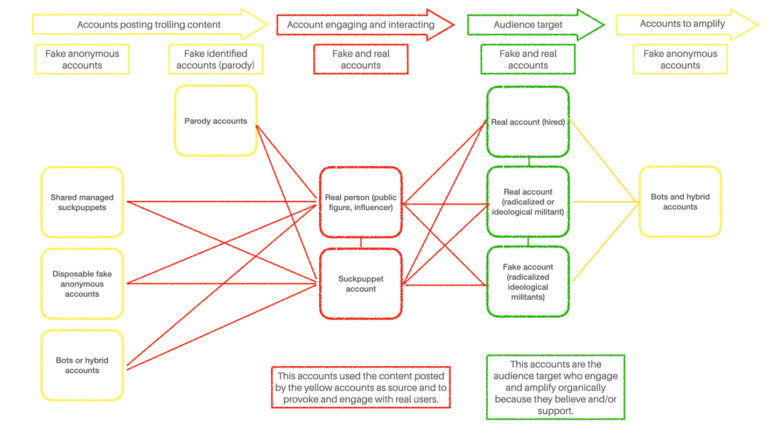

There are two ways to understand their activity as part of a harassment campaign: their role in an amplification ecosystem, and their role in an attack operation.

Amplification Ecosystem

An amplification ecosystem is the inventory of assets, accounts, channels, pages, or spaces used to disseminate and amplify messages as part of a strategy. This graphic shows an example of the elements of a media amplification ecosystem used to manipulate public opinion. Each strategy requires a different ecosystem.

Different elements of a trolling campaign’s amplification ecosystem. Image: Courtesy of Luis Assardo

Attack Operation

The following flowchart shows a basic social media operation to spread a narrative.

- Setting the content: First, a group of diverse, disposable fake accounts (in yellow) posts trolling content (fabricated, false, doctored, etc.). These accounts may have little or no engagement; their purpose is to post content that will later be pushed to larger audiences.

- Distributing the content: The group of accounts in red (trolls and public figures) picks up that content and distributes it to specific audiences, mostly supporters or radicalized users. The red accounts use the content to provoke adversaries and engage with supporters. If the platform (Twitter or Meta) receives reports about the content, the red accounts avoid trouble or suspension because they “only” shared something posted by other accounts. The yellow accounts are the ones exposed to suspension. This is how trolls avoid being shut down.

- Organic spread: Once the provocation or engagement reaches the accounts in the green group, the content spreads organically to a larger audience. After that, another set of yellow accounts (or the same ones, if they haven’t been suspended yet) amplifies the posts from the green accounts.

Flow chart of the rollout strategy of a trolling campaign. Image: Courtesy of Luis Assardo
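The rollout described above can be approached as a labeling problem over share data. The sketch below is a minimal illustration, not the author’s method. It assumes you have exported share events as (sharer, original author) pairs and have follower counts for each account. The function name `classify_roles` and the thresholds `LOW_REACH`, `HIGH_REACH`, and `min_amplifiers` are hypothetical values that would need tuning for each investigation. The code flags low-follower accounts (the “yellow” seeds) whose posts are picked up by several high-follower accounts (the “red” amplifiers).

```python
from collections import defaultdict

# Hypothetical thresholds; tune them to the platform and campaign under study.
LOW_REACH = 100       # followers below this: a likely "disposable" seed account
HIGH_REACH = 10_000   # followers at or above this: a high-reach amplifier


def classify_roles(shares, followers, min_amplifiers=2):
    """Label likely seed and amplifier accounts from share events.

    shares: iterable of (sharer, original_author) tuples, e.g. retweets.
    followers: dict mapping account -> follower count.
    """
    # author -> distinct high-reach accounts that shared the author's posts
    amplified_by = defaultdict(set)
    for sharer, author in shares:
        if sharer != author and followers.get(sharer, 0) >= HIGH_REACH:
            amplified_by[author].add(sharer)

    # Seeds: low-reach authors picked up by several high-reach accounts.
    seeds = {
        author
        for author, sharers in amplified_by.items()
        if followers.get(author, 0) < LOW_REACH and len(sharers) >= min_amplifiers
    }

    # Amplifiers: the high-reach accounts that pushed content from a seed.
    amplifiers = set()
    for author in seeds:
        amplifiers |= amplified_by[author]
    return {"seeds": seeds, "amplifiers": amplifiers}
```

A flag from a heuristic like this is a lead for manual review, not proof of coordination, because public figures share low-reach content for many legitimate reasons.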

How Investigative Journalists Can Identify and Analyze Trolls

There are two main steps in investigating trolls: detection and analysis.

Detection

In the detection phase, it is crucial to monitor and identify suspicious patterns of behavior or content, such as coordinated posting, repetitive messaging, or a sudden surge in negative comments or interactions. This requires social listening and social monitoring tools, which let us track social media platforms, online forums, and other digital spaces where trolls may be active. It is also important to create a secure channel to receive information from any internet user who may be under attack. Any sign of targeted attacks, doxxing, or threats can help identify a potential campaign early.
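One common way to make “a sudden surge” measurable is to compare each hour’s message volume against a trailing baseline. The sketch below assumes you have already counted the messages mentioning a target for each hour, for example from a social listening export. The function name `find_bursts`, the 24-hour window, and the three-standard-deviation threshold are illustrative assumptions and do not come from any particular tool.

```python
from statistics import mean, pstdev


def find_bursts(hourly_counts, window=24, threshold=3.0):
    """Return indexes of hours whose count is unusually high versus the prior window.

    hourly_counts: list of message counts per hour (e.g. replies mentioning a target).
    window: number of preceding hours used as the baseline (assumed value).
    threshold: standard deviations above the baseline mean that count as a burst.
    """
    bursts = []
    for i in range(window, len(hourly_counts)):
        baseline = hourly_counts[i - window:i]
        mu = mean(baseline)
        # Treat a perfectly flat baseline as having a deviation of 1 to avoid dividing by zero.
        sigma = pstdev(baseline) or 1.0
        if (hourly_counts[i] - mu) / sigma >= threshold:
            bursts.append(i)
    return bursts


print(find_bursts([5] * 24 + [6, 80, 7]))  # prints [25]
```

A trailing window adapts to an account’s normal activity level, so a target who usually receives hundreds of replies is not flagged for an ordinary busy hour.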

Analysis

During the analysis phase, journalists should examine the content, timing, and origin of the suspicious activity to determine if it is part of a coordinated campaign. This involves exploring the messages for consistency, analyzing the profiles involved (including account creation dates, posting patterns, and connections to other known troll accounts via interactions or hashtags), and evaluating the potential impact of the campaign on targeted individuals or communities.
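Checking messages for consistency often starts with finding copy-and-paste posts. This minimal sketch assumes posts are available as (account, text) pairs. It normalizes each text by lowercasing it and stripping links, @mentions, and punctuation, then groups the accounts that posted the same normalized message. The helper names `normalize` and `copy_paste_groups` and the `min_accounts` cutoff are hypothetical.

```python
import re
from collections import defaultdict


def normalize(text):
    """Reduce a post to a comparable form: lowercase, no URLs, mentions, or punctuation."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)  # drop links, which often vary per post
    text = re.sub(r"@\w+", " ", text)          # drop @mentions
    text = re.sub(r"[^\w\s#]", " ", text)      # drop punctuation but keep hashtags
    return " ".join(text.split())              # collapse repeated whitespace


def copy_paste_groups(posts, min_accounts=3):
    """Group (account, text) posts whose normalized text is identical.

    Returns {normalized_text: sorted accounts} for texts posted by at least
    min_accounts distinct accounts, a common sign of coordinated messaging.
    """
    groups = defaultdict(set)
    for account, text in posts:
        groups[normalize(text)].add(account)
    return {
        text: sorted(accounts)
        for text, accounts in groups.items()
        if len(accounts) >= min_accounts
    }
```

Exact matching after normalization is simple and easy to explain in a methodology note, but it will miss paraphrased posts, which need fuzzier similarity measures.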

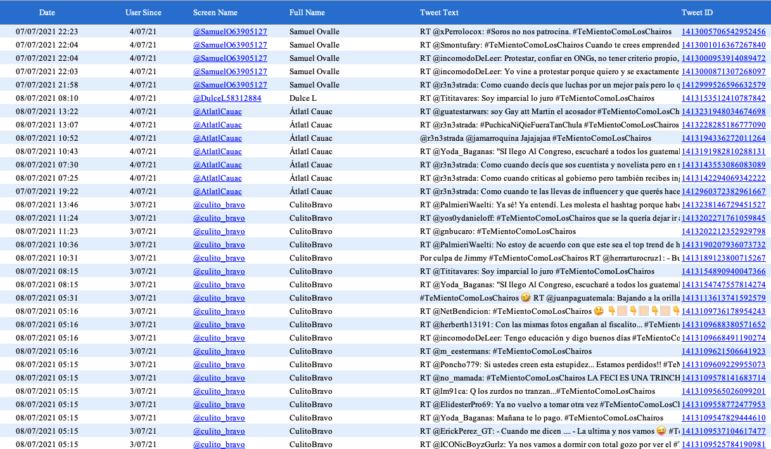

Here is an example of tweets from accounts created to amplify messages. The tweets show accounts created recently (within a 24-hour period) retweeting every post that includes the hashtag they are pushing. Those accounts were part of a larger network of more than 200 accounts doing the same. With tools such as Twitter Archiver, Tweet Archivist, or any other tool used to download tweets, we can spot accounts created on the same day and in the same hour, sharing the same messages, and showing high-intensity activity in the same periods of time.

A spreadsheet tracking a flock of Twitter accounts being used in a trolling campaign. Image: Courtesy of Luis Assardo
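Once account creation dates are in a spreadsheet export, the same-day, same-hour pattern described above can be checked with a few lines of code. The sketch assumes each row gives a handle and an ISO 8601 creation timestamp, and it treats timestamps without a time zone as UTC. The function name `creation_clusters` and the `min_size` cutoff are hypothetical, and the tools named above may export dates in other formats that would need converting first.

```python
from collections import defaultdict
from datetime import datetime, timezone


def creation_clusters(accounts, min_size=5):
    """Group accounts created within the same UTC clock hour.

    accounts: iterable of (handle, created_at) pairs, created_at as ISO 8601 text.
    Returns {hour_bucket: sorted handles} for buckets with at least min_size accounts.
    """
    buckets = defaultdict(list)
    for handle, created_at in accounts:
        # Python versions before 3.11 reject a trailing "Z" in fromisoformat().
        ts = datetime.fromisoformat(created_at.replace("Z", "+00:00"))
        # Treat naive timestamps as UTC; convert timezone-aware ones to UTC.
        if ts.tzinfo is None:
            ts = ts.replace(tzinfo=timezone.utc)
        else:
            ts = ts.astimezone(timezone.utc)
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour.isoformat()].append(handle)
    return {
        hour: sorted(handles)
        for hour, handles in sorted(buckets.items())
        if len(handles) >= min_size
    }
```

Converting everything to UTC first matters because exports may mix time zones, and two accounts created at the same instant would otherwise land in different hourly buckets.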

This methodology outlines a process you can use to detect and analyze potential troll accounts.

- Monitor social media platforms for suspicious activity or sudden changes in the tone or content of online discussions.

- Use social listening tools, such as Brandwatch, Meltwater, Brand24, or Talkwalker, to track specific keywords, hashtags, or phrases that may be associated with trolling or harassment campaigns.

- Use network analysis tools, such as Graphext, Graphistry, Gephi, or NodeXL, to visualize connections between accounts and identify potential coordination.

- Analyze the content of messages for patterns, connections, or signs of disinformation.

- Investigate the profiles of potential trolls, including account creation dates, posting patterns, and connections to known troll accounts.

- Collaborate with other journalists, disinformation researchers, or cybersecurity experts to verify findings and gain additional insights.

- Document and preserve data using screenshots and public archives like the Wayback Machine.

- Report on identified campaigns to raise awareness and help mitigate their impact.
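For the network analysis step, dedicated tools such as Gephi or NodeXL are the practical choice, but the underlying idea can be sketched in plain Python. This minimal example assumes share events exported as (account, item) pairs, where an item could be a retweeted post ID, a URL, or a hashtag. It links accounts that have at least `min_shared` items in common and returns the connected groups. The function name and threshold are hypothetical, and the pairwise comparison is only practical for modest datasets.

```python
from collections import defaultdict
from itertools import combinations


def coordination_components(events, min_shared=2):
    """Find groups of accounts that repeatedly share the same items.

    events: iterable of (account, item) pairs (post IDs, URLs, or hashtags).
    Two accounts are linked when they share at least min_shared common items.
    Returns connected groups of linked accounts, largest first.
    """
    items_by_account = defaultdict(set)
    for account, item in events:
        items_by_account[account].add(item)

    # Build an undirected co-sharing graph as an adjacency map.
    graph = defaultdict(set)
    for a, b in combinations(sorted(items_by_account), 2):
        if len(items_by_account[a] & items_by_account[b]) >= min_shared:
            graph[a].add(b)
            graph[b].add(a)

    # Collect connected components with an iterative depth-first search.
    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(graph[node] - seen)
        components.append(sorted(component))
    return sorted(components, key=len, reverse=True)
```

Large components are candidates for closer review, for example by loading the underlying links into Gephi to visualize them alongside account metadata.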

There are also tools that can help identify, analyze, and visualize trolling campaigns.

- Social listening tools: Brandwatch, Meltwater, Brand24, Talkwalker, or Mention.

- Network analysis tools: Graphext, Graphistry, Gephi, NodeXL, or UCINet (Windows only).

- Data visualization tools: Tableau, D3.js, or Google Data Studio (now Looker Studio).

- Open-source intelligence (OSINT) tools: Maltego, TweetDeck, or Google Advanced Search.

- Fact-checking references: Snopes, FactCheck.org, or Full Fact.

- Terms of Service, rules, and policies of the social media platforms (Twitter, Facebook, TikTok, etc.).

- Digital security tools to protect your data and communications. Use encryption to protect your findings.

Trolling can be carried out by accounts that use a real name or identity, or by accounts that use a fake or pseudonymous name, as shown in this table:

| Type of account | Identified (real name) | Anonymous/Pseudonymous |
| --- | --- | --- |
| Real | Real person clearly identified. | Real person using a non-identified account. |
| Fake | Bots, impersonation, and parody accounts that are identified, usually for services or entertainment. | Bots, sock puppet accounts, and parody accounts used to spread disinformation or harass. |

Exposing Trolls: Best Practices for Sharing Your Findings

Any report on trolling should include four basic items: modus operandi, type of content used, intentionality (if proven), and attribution (if proven).

First, it is important to provide context and background information about the harassment or trolling campaign. This includes explaining the origin and motivations behind the campaign, the targeted individuals or communities, and any potential connections to broader social or political issues. Providing this context helps readers understand the significance and potential consequences of the campaign. It is very important to avoid sensationalizing it.

You should include the methodology and evidence supporting the identification and analysis of the campaign you are exposing. Explain the tools, techniques, and data sources used in the research, while maintaining a clear and objective tone. A transparent analysis helps establish the credibility of your reporting and provides a solid foundation for public understanding.

Also consider the ethical implications of your reporting. Be careful not to amplify the trolls’ or harassers’ messages or tactics by reproducing offensive content or resharing their posts; amplification may be exactly their goal. Always protect the privacy and safety of targeted individuals by not sharing sensitive personal information or images without their consent.

Here’s a breakdown of information to include in a report on harassment campaigns and trolling.

- Context and background information about the campaign.

- The targeted individuals or communities.

- Motivations and objectives of the harassers or trolls.

- Evidence of coordination or organized efforts.

- Methodology and tools used for identification and analysis.

- Quantitative and qualitative data, such as the volume of messages or the severity of harassment.

- Potential consequences and impact on targeted individuals or communities.

- Connections to broader social or political issues, if relevant.

- Any actions taken by social media platforms, law enforcement, or other relevant parties to address the issue.

Investigative journalists should avoid these elements when reporting.

- Sensationalizing or exaggerating the impact of the campaign.

- Reproducing offensive or harmful content without a clear journalistic purpose.

- Giving undue attention to the goals or messages of the trolls or harassers.

- Violating the privacy or safety of targeted individuals by sharing sensitive information or images without consent.

- Speculating about the motivations or identities of the harassers or trolls without sufficient evidence.

Case Studies

We know their origins, their strategies, and their tactics, but what does a trolling campaign look like? These examples show well-funded campaigns that include massive amounts of content and involve thousands of mostly fake accounts.

Saudis’ Image Makers: A Troll Army and a Twitter Insider — The New York Times

Jamal Khashoggi was a Saudi dissident journalist assassinated in 2018. Before his death, he faced a massive trolling campaign aimed at silencing him and other critics of Saudi Arabia. The campaign used a state-backed network to spread disinformation and smears. This is a good example of how tracking troll campaigns, combined with traditional reporting with sources, can expose big disinformation operations, the resources they use, and who is behind them.

Maria Ressa: Fighting an Onslaught of Online Violence — International Center for Journalists (ICFJ)

The ICFJ analyzed more than 400,000 tweets and 57,000 public Facebook posts and comments published over a five-year period as part of a trolling campaign against Rappler CEO Maria Ressa, a journalist who won the Nobel Peace Prize in 2021. Using data gathering and visualization tools, the reporting team was able to understand how the attackers operated and to identify resources and authors. Here, the key factor is the behavior exposed: once the attacks are attributed, their level of violence says a great deal about the people behind them.

Rana Ayyub: Targeted Online Violence at the Intersection of Misogyny and Islamophobia — ICFJ

Rana Ayyub is an award-winning investigative reporter and a Washington Post columnist. Her comments about violence and human rights abuses in India led to a trolling campaign that included assaults on her credibility, as well as sexist and misogynistic threats. The researchers reconstructed a timeline of attacks spanning many years and analyzed around 13 million tweets, as well as content from traditional media outlets involved in the amplification.

From Trump Supporters to a Human Rights Attorney: The Digital Influencers who Harassed a Journalist — Forbidden Stories

In February 2023, Forbidden Stories published a detailed investigation called Story Killers. Part of the investigation exposed an operation that included trolling against Ghada Oueiss, a veteran journalist working for Al Jazeera. The journalists who investigated the case tracked down the main profiles involved in the harassment campaign and cross-referenced them with the networks involved.

Additional Resources

Investigating the Digital Threat Landscape

Investigating Digital Threats: Disinformation

Investigating Digital Threats: Digital Infrastructure

Luis Assardo is a freelance digital security trainer, open source researcher and data-investigative journalist, and founder of Confirmado, a Guatemalan project to fight disinformation. He has won awards for his investigations and research about troll factories, disinformation, radicalization, hate speech, and influence operations. Based in Berlin, he works with Reporters Without Borders (RSF), the Holistic Protection Collective (HPC), and other human rights organizations.

The post Investigating Digital Threats: Trolling Campaigns appeared first on Global Investigative Journalism Network.
